System Failure: 7 Shocking Causes and How to Prevent Them

Ever felt the ground shift beneath you when everything suddenly stops working? That’s the chilling reality of a system failure—silent, sudden, and often devastating. From power grids to software networks, no system is immune. Let’s dive into what really happens when systems collapse and how we can stop them before they do.

What Is a System Failure?

A system failure occurs when a complex network—be it mechanical, digital, organizational, or biological—ceases to function as intended, leading to partial or total breakdown. These failures aren’t just glitches; they represent critical vulnerabilities in design, maintenance, or human oversight. Understanding their nature is the first step toward prevention.

Defining System Failure in Technical Terms

In engineering and computer science, a system failure is formally defined as the inability of a system to perform its required functions within specified limits. This could mean a server crashing under load, a manufacturing line halting due to sensor error, or an aircraft’s navigation system malfunctioning mid-flight. The key factor is the deviation from expected operational behavior.

- Failures can be transient (temporary) or permanent.

- They may affect single components or cascade across the entire system.

- Standards like ISO/IEC 15026 define system failure in terms of reliability, availability, and maintainability (RAM).

“A system is only as strong as its weakest link.” — Herbert Sichel, reliability engineer

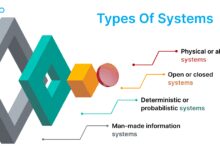

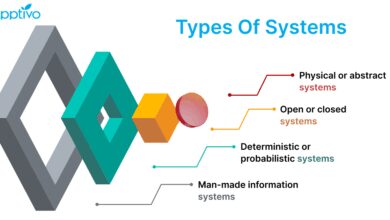

Types of System Failures

Not all system failures are created equal. They vary by origin, impact, and recovery method. Recognizing the type helps in diagnosing and mitigating future risks.

Hardware Failure: Physical components like servers, circuits, or motors break down.Example: A data center’s cooling system fails, causing servers to overheat.Software Failure: Bugs, memory leaks, or unhandled exceptions crash applications.Example: The 2021 Facebook outage was caused by a BGP routing configuration error.Network Failure: Communication links fail due to congestion, misconfiguration, or cyberattacks.Example: Distributed Denial of Service (DDoS) attacks overwhelm network capacity.

.Human-Induced Failure: Mistakes in operation, configuration, or maintenance.Example: An engineer accidentally deletes a critical database.Environmental Failure: Natural disasters like floods, earthquakes, or solar flares disrupt operations.Example: The 2011 Tōhoku earthquake caused Fukushima’s cooling systems to fail.For deeper insights into failure classification, visit ReliabilityWeb, a trusted resource for system integrity research..

Common Causes of System Failure

Behind every system failure lies a chain of causes—some obvious, others hidden in plain sight. Identifying these root causes is essential for building resilient systems.

Poor Design and Architecture

Many system failures stem from flawed initial design. When systems are built without redundancy, scalability, or fault tolerance, they’re inherently fragile.

- Lack of failover mechanisms means one component failure can bring down the entire system.

- Monolithic architectures are harder to debug and scale compared to microservices.

- Ignoring load testing during development leads to collapse under real-world stress.

For instance, the 1999 Mars Climate Orbiter disintegrated because of a unit mismatch—NASA used metric units while Lockheed Martin used imperial. A simple design oversight with catastrophic consequences.

Software Bugs and Coding Errors

Even the most sophisticated software is written by humans—and humans make mistakes. A single line of faulty code can trigger a system failure.

- Null pointer exceptions, infinite loops, and race conditions are common culprits.

- Insufficient testing, especially in edge cases, allows bugs to slip into production.

- Legacy codebases with poor documentation increase the risk of unintended side effects during updates.

The 2012 Knight Capital Group incident saw $440 million lost in 45 minutes due to a software deployment error. A forgotten flag in old code activated unintended trading algorithms.

Hardware Degradation and Obsolescence

Physical components wear out. Hard drives fail, capacitors leak, and circuits corrode. Yet, many organizations delay hardware upgrades due to cost or inertia.

- Mean Time Between Failures (MTBF) is a key metric for predicting hardware lifespan.

- Outdated equipment may not support modern security patches or software updates.

- Supply chain issues can delay replacements, increasing downtime risk.

According to a Backblaze report, hard drive failure rates spike after three years of use, emphasizing the need for proactive replacement.

System Failure in Critical Infrastructure

When system failure strikes critical infrastructure—power, water, healthcare, or transportation—the consequences are not just inconvenient; they’re life-threatening.

Power Grid Failures

Electricity is the lifeblood of modern society. When the grid fails, hospitals, communication, and transportation systems grind to a halt.

- The 2003 Northeast Blackout affected 55 million people across the U.S. and Canada due to a software bug in an Ohio utility’s monitoring system.

- Overloaded transmission lines and lack of real-time monitoring contribute to cascading failures.

- Climate change increases strain on grids through extreme weather events.

The U.S. Department of Energy emphasizes the need for smart grid technologies to prevent future outages. Learn more at energy.gov.

Healthcare System Failures

In healthcare, system failure can mean delayed diagnoses, lost patient records, or even fatal medication errors.

- EHR (Electronic Health Record) system crashes disrupt patient care.

- Medical devices like pacemakers or infusion pumps can fail due to firmware bugs.

- During the 2020 pandemic, overwhelmed IT systems in hospitals led to data bottlenecks and communication breakdowns.

A 2021 study by the National Institutes of Health found that 30% of medical device recalls were due to software issues.

Transportation and Aviation Failures

From air traffic control systems to autonomous vehicles, transportation relies heavily on flawless system performance.

- The 2019 grounding of Boeing 737 MAX aircraft was due to a flawed MCAS system that relied on a single sensor.

- Train signaling system failures have caused deadly collisions, such as the 2015 Philadelphia Amtrak derailment.

- GPS spoofing and jamming pose emerging threats to navigation systems.

The FAA now mandates stricter software validation processes for avionics systems to prevent future system failure tragedies.

Cybersecurity and System Failure

In the digital age, system failure is increasingly caused not by accidents, but by malicious intent. Cyberattacks can cripple systems in seconds.

Ransomware Attacks

Ransomware encrypts critical data, demanding payment for its release. These attacks often exploit weak security protocols.

- The 2021 Colonial Pipeline attack disrupted fuel supply across the U.S. East Coast.

- Attackers gained access through a single compromised password on a legacy VPN system.

- Recovery costs include not just ransom, but downtime, legal fees, and reputational damage.

The FBI advises organizations to implement zero-trust architectures and regular backups to mitigate ransomware risks.

Distributed Denial of Service (DDoS)

DDoS attacks flood systems with traffic, overwhelming servers and causing outages.

- Attackers use botnets—networks of infected devices—to generate massive traffic volumes.

- Cloudflare reports that DDoS attacks increased by 78% in 2022 compared to the previous year.

- Financial institutions, gaming platforms, and government sites are frequent targets.

For real-time DDoS mitigation strategies, visit Cloudflare’s DDoS guide.

Insider Threats

Not all threats come from outside. Employees or contractors with access can intentionally or accidentally cause system failure.

- Motivations include revenge, financial gain, or espionage.

- Accidental data deletion or misconfiguration is more common than malicious acts.

- Monitoring user activity and enforcing least-privilege access can reduce risk.

A 2023 Verizon Data Breach Investigations Report found that 18% of data breaches involved internal actors.

Human Error and Organizational Failure

Even with perfect technology, human decisions can trigger system failure. Poor training, communication gaps, and organizational culture play a huge role.

Miscommunication and Coordination Breakdown

In complex systems, multiple teams must work in sync. When communication fails, so does the system.

- The 1999 Mars Polar Lander failed because two engineering teams used different measurement units.

- During crisis response, unclear command structures delay decision-making.

- Shift handovers without proper documentation increase error rates.

Checklists, standardized protocols, and cross-training can bridge communication gaps.

Lack of Training and Preparedness

Operators who don’t understand system limits or emergency procedures can make fatal mistakes.

- The 1986 Chernobyl disaster was exacerbated by operators disabling safety systems during a test.

- Simulations and regular drills improve response times during actual failures.

- Training should include both technical skills and crisis management.

NASA’s rigorous simulation programs are a model for high-stakes environments.

Organizational Culture and Complacency

A culture that ignores warnings, discourages reporting, or prioritizes speed over safety sets the stage for system failure.

- The 1986 Challenger disaster was preceded by engineers’ ignored warnings about O-ring failure in cold weather.

- “Normalization of deviance” occurs when small risks are accepted until they cause major failure.

- Leaders must foster psychological safety so employees can report issues without fear.

Dr. Diane Vaughan, who studied the Challenger case, coined the term “normalization of deviance” to describe how organizations slowly accept risky behavior.

Preventing System Failure: Best Practices

While no system is 100% failure-proof, robust strategies can drastically reduce risk and improve resilience.

Implement Redundancy and Failover Systems

Redundancy ensures that if one component fails, another takes over seamlessly.

- Data centers use redundant power supplies, cooling, and network paths.

- Cloud platforms like AWS offer multi-AZ (Availability Zone) deployments for high availability.

- RAID configurations protect against disk failure in storage systems.

Google’s global infrastructure is designed with redundancy at every level, ensuring 99.99% uptime for critical services.

Regular Maintenance and Monitoring

Proactive maintenance catches issues before they escalate.

- Use monitoring tools like Nagios, Prometheus, or Datadog to track system health.

- Schedule regular patching, updates, and hardware inspections.

- Log analysis helps detect anomalies before they cause failure.

According to Gartner, organizations that perform regular system audits reduce unplanned downtime by 40%.

Conduct Risk Assessments and Simulations

Anticipating failure is half the battle. Risk assessments identify vulnerabilities before they’re exploited.

- FMEA (Failure Mode and Effects Analysis) is a structured approach to evaluating potential failure points.

- Red team exercises simulate cyberattacks to test defenses.

- Disaster recovery drills prepare teams for real-world outages.

The U.S. Department of Homeland Security conducts annual Cyber Storm exercises to test national response capabilities.

Recovering from System Failure

When prevention fails, recovery becomes critical. The speed and effectiveness of response determine the extent of damage.

Incident Response Planning

A well-defined incident response plan minimizes chaos during a crisis.

- Steps include identification, containment, eradication, recovery, and post-mortem analysis.

- Assign clear roles: incident commander, communications lead, technical lead.

- Use frameworks like NIST SP 800-61 for standardized response protocols.

The 2017 WannaCry ransomware attack could have been less damaging if more organizations had updated their systems and had response plans in place.

Data Backup and Restoration

Backups are the last line of defense against data loss.

- Follow the 3-2-1 rule: 3 copies of data, on 2 different media, with 1 offsite.

- Test restoration procedures regularly—many backups fail when needed.

- Immutable backups protect against ransomware encryption.

Backblaze and AWS S3 Glacier offer cost-effective, secure backup solutions for enterprises.

Post-Mortem Analysis and Continuous Improvement

After a system failure, a blameless post-mortem helps prevent recurrence.

- Focus on processes, not people.

- Document root causes, timeline, and lessons learned.

- Implement corrective actions and track their effectiveness.

GitHub’s public post-mortems are a gold standard in transparency and learning from failure.

Future of System Resilience

As systems grow more complex, so must our approaches to preventing system failure. Emerging technologies offer both challenges and solutions.

AI and Predictive Maintenance

Artificial intelligence can predict failures before they happen by analyzing patterns in system data.

- Machine learning models detect anomalies in server performance, network traffic, or sensor readings.

- Predictive maintenance reduces downtime and extends equipment life.

- AI-driven observability platforms like Dynatrace and New Relic provide real-time insights.

A McKinsey study found that AI-powered maintenance can reduce equipment downtime by up to 50%.

Blockchain for System Integrity

Blockchain’s decentralized, immutable ledger can enhance system transparency and trust.

- Secure logging of system events prevents tampering.

- Smart contracts can automate failover processes.

- Useful in supply chain, healthcare, and voting systems where integrity is critical.

Estonia uses blockchain to secure its national health records, reducing the risk of system failure due to data corruption.

Quantum Computing and New Threats

While quantum computing promises breakthroughs, it also threatens current encryption methods.

- Quantum computers could break RSA encryption, compromising secure communications.

- Post-quantum cryptography is being developed to counter this threat.

- Organizations must prepare for a future where today’s secure systems become vulnerable.

NIST is leading the transition to quantum-resistant algorithms, with standards expected by 2024.

What is the most common cause of system failure?

The most common cause of system failure is human error, particularly in configuration, maintenance, or response procedures. However, software bugs and hardware degradation are also leading contributors, especially in complex digital systems.

How can organizations prevent system failure?

Organizations can prevent system failure by implementing redundancy, conducting regular maintenance, performing risk assessments, training staff, and adopting robust incident response plans. Proactive monitoring and a culture of safety are equally critical.

What is a cascading system failure?

A cascading system failure occurs when the failure of one component triggers failures in other parts of the system, leading to a widespread collapse. This is common in power grids and networked IT systems.

Can AI prevent system failure?

Yes, AI can help prevent system failure by analyzing vast amounts of operational data to predict anomalies and recommend preemptive actions. However, AI systems themselves must be carefully designed to avoid introducing new failure points.

What should you do immediately after a system failure?

Immediately after a system failure, activate your incident response plan: contain the issue, assess the impact, restore critical functions, and begin data recovery. Communication with stakeholders and documentation are essential for recovery and analysis.

System failure is not a matter of if, but when. From flawed designs to cyberattacks, the triggers are many, but the solutions are within reach. By understanding root causes, investing in resilience, and learning from past mistakes, we can build systems that withstand the unexpected. The future belongs to those who prepare—not just for success, but for failure.

Further Reading: